https://colah.github.io/posts/2015-08-Understanding-LSTMs/

An RNN is another neural network paradigm. It has different layers of cells, and each cell takes as input not only the cell from the previous layer but also the previous cell within the same layer. This gives RNNs the power to model sequences.

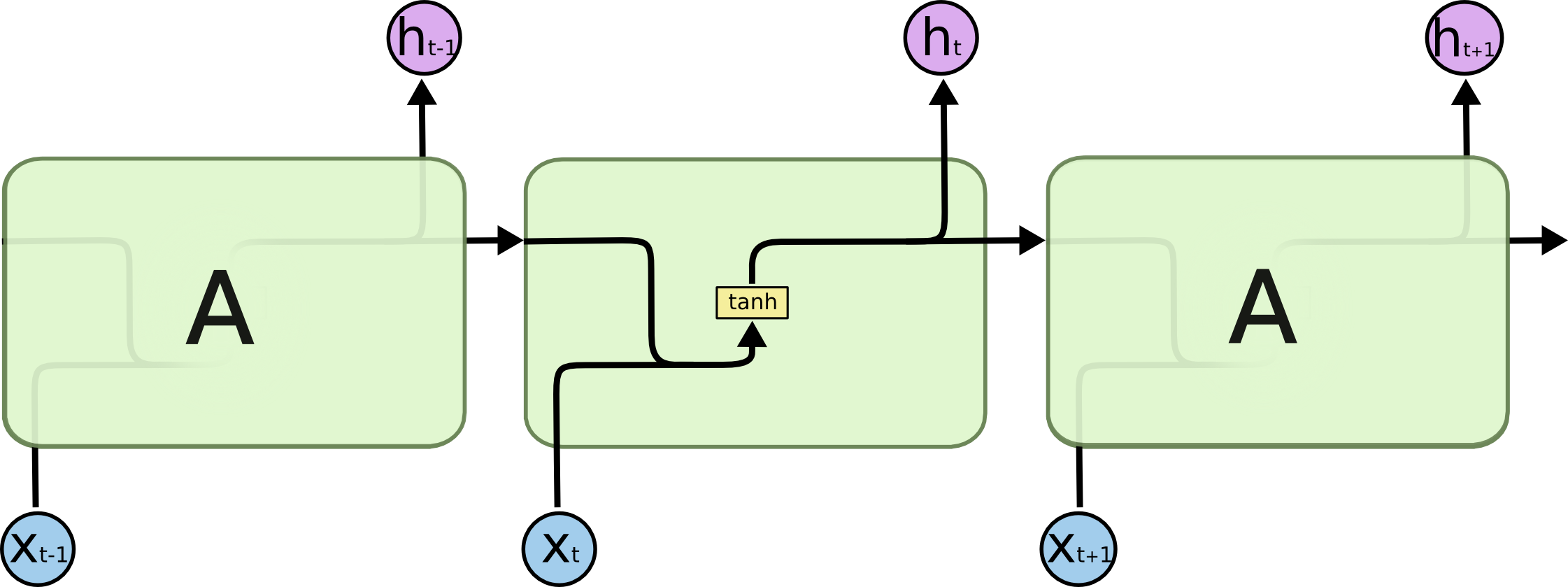

A recurrent neural network can be thought of as multiple copies of the same network, each passing a message to a successor. Unrolling the loop makes this chain structure explicit.

All recurrent neural networks have the form of a chain of repeating neural network modules. In standard RNNs, this repeating module has a very simple structure, such as a single tanh layer.

- $h_t = f(h_{t-1}, x_t; \theta)$, where the current hidden state $h_t$ is a function of the previous hidden state $h_{t-1}$ and the current input $x_t$. The $\theta$ are the parameters of the function $f$.
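The recurrence above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the repeating module is a single tanh layer applied to the concatenation of the previous hidden state and the current input. The names `rnn_step`, `rnn_forward`, `A`, and `b` are hypothetical and chosen only for this example; `A` and `b` play the role of the function's parameters.

```python
import numpy as np

def rnn_step(h_prev, x_t, A, b):
    """One recurrence step: new hidden state from the previous state and current input."""
    # Assumption: a single tanh layer over the concatenated vector [h_prev; x_t].
    return np.tanh(A @ np.concatenate([h_prev, x_t]) + b)

def rnn_forward(xs, A, b, hidden_size):
    """Apply the same step (same A and b) at every position of the sequence."""
    h = np.zeros(hidden_size)  # initial hidden state, assumed to be all zeros
    hs = []
    for x_t in xs:
        h = rnn_step(h, x_t, A, b)
        hs.append(h)
    return np.stack(hs)

rng = np.random.default_rng(0)
hidden_size, input_size, seq_len = 4, 3, 5
A = rng.normal(scale=0.5, size=(hidden_size, hidden_size + input_size))
b = np.zeros(hidden_size)
xs = rng.normal(size=(seq_len, input_size))

hs = rnn_forward(xs, A, b, hidden_size)
print(hs.shape)  # (5, 4): one hidden state per time step
```

Note that the loop reuses the same `A` and `b` at every step, which is what "multiple copies of the same network" means in practice.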

Summary

- RNNs are suited to text, speech, and time series data.

- Hidden state $h_t$ aggregates information in the inputs $x_1, \dots, x_t$.

- RNNs can forget early inputs.

- It forgets what it has seen early on.

- If $t$ is large, the hidden state $h_t$ is almost irrelevant to the first input $x_1$.
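The forgetting behaviour can be illustrated with a small experiment: run the same tanh RNN on two sequences that differ only in their first input, and measure how far apart the hidden states are at each step. This is a sketch under stated assumptions, not a general proof. In particular, the recurrent block of the hypothetical matrix `A` is rescaled to spectral norm 0.9, which guarantees that the gap shrinks at every step; with larger recurrent weights the behaviour can differ.

```python
import numpy as np

rng = np.random.default_rng(0)
hidden_size, input_size, seq_len = 8, 3, 50

# A acts on the concatenation [h_prev; x_t]; its first hidden_size columns
# multiply the previous hidden state.
A = rng.normal(size=(hidden_size, hidden_size + input_size))
# Assumption for this demo: rescale the recurrent block to spectral norm 0.9.
A[:, :hidden_size] *= 0.9 / np.linalg.norm(A[:, :hidden_size], 2)

def hidden_states(xs):
    """Return the hidden state at every step, starting from a zero state."""
    h = np.zeros(hidden_size)
    out = []
    for x_t in xs:
        h = np.tanh(A @ np.concatenate([h, x_t]))
        out.append(h)
    return np.stack(out)

xs = rng.normal(size=(seq_len, input_size))
xs_alt = xs.copy()
xs_alt[0] = rng.normal(size=input_size)  # change only the first input

# Distance between the two runs' hidden states at each time step.
gap = np.linalg.norm(hidden_states(xs) - hidden_states(xs_alt), axis=1)
print(gap[0], gap[-1])  # the final gap is far smaller than the initial one
```

Because tanh never stretches distances and the recurrent block shrinks them by a factor of at most 0.9, the effect of the first input on the final hidden state is bounded by `0.9 ** (seq_len - 1)` times its effect on the first hidden state.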

Number of parameters

- SimpleRNN has a parameter matrix (and perhaps an intercept vector).

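- Shape of the parameter matrix is $\text{shape}(h) \times [\text{shape}(h) + \text{shape}(x)]$, where $h$ is the hidden state and $x$ is the input.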

- Only one such parameter matrix, no matter how long the sequence is.
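The parameter count can be checked with simple arithmetic. The helper below is a hypothetical illustration (the name `simple_rnn_param_count` is not from any library), assuming one parameter matrix that acts on the concatenated hidden state and input, plus an optional intercept vector of the same size as the hidden state.

```python
def simple_rnn_param_count(hidden_size, input_size, use_bias=True):
    """Count the parameters of a simple RNN with one shared parameter matrix."""
    # The matrix maps the concatenated [hidden state; input] to a new hidden state.
    matrix = hidden_size * (hidden_size + input_size)
    # Optional intercept vector, one entry per hidden unit.
    bias = hidden_size if use_bias else 0
    return matrix + bias

print(simple_rnn_param_count(32, 32))                  # 2080
print(simple_rnn_param_count(32, 32, use_bias=False))  # 2048
```

Sequence length is deliberately not an argument: the same matrix is reused at every time step, so the count is the same for a sequence of 10 steps or 10,000.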